Research highlights

2021:

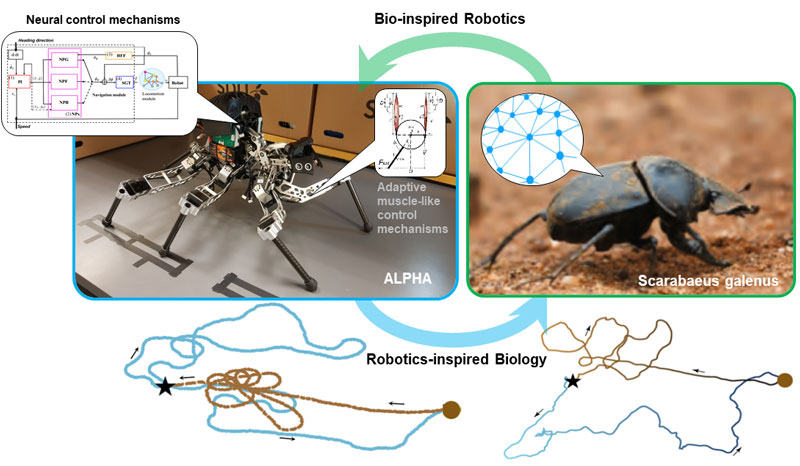

No need for landmarks: An embodied neural controller for robust insect-like navigation behaviors

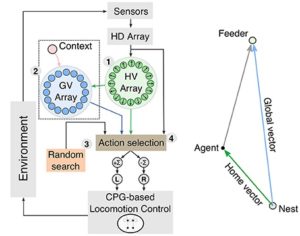

Dung beetles (Scarabaeus galenus) are tiny animals capable of self-localization, foraging, home searching, and transferring large dung balls. Existing knowledge is still insufficient to decode the neural control mechanisms underlying these complex behaviors. To address this, we used bio-inspired robotics to develop “embodied” neural control mechanisms for a dung beetle robot. The control mechanisms have a modular structure consisting of navigation and locomotion modules. The navigation module controls the heading direction for various insect-like behaviors (i.e., random foraging, goal-directed navigation, backward homing, and home searching) using minimal information (i.e., heading angle and speed) rather than global positioning or landmark guidance. A special network inside the module, with only three neurons (sign, Gaussian, and tangent; SGT), allows accurate goal-directed navigation even when subjected to sensory noise (e.g., variable sensing errors) and constrained navigation (e.g., fixed sensing errors), thereby improving self-localization accuracy. In parallel, the locomotion module, based on a central pattern generator (CPG) and premotor neural networks, coordinates the 18 joints of the robot and generates various dung beetle/insect-like gaits. We successfully demonstrated the performance of the control mechanisms in real-time indoor and outdoor robot navigation without any landmarks or cognitive map (video). For more details, see Xiong and Manoonpong, IEEE Transactions on Cybernetics, 2021.
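To illustrate how a CPG can supply the rhythmic signal that premotor networks then shape into joint commands, here is a minimal sketch of one common discrete-time formulation, a two-neuron SO(2)-type oscillator. The summary above does not state which CPG model the robot uses, so the model choice, the function name `so2_cpg`, and the parameters `alpha` and `phi` are all assumptions made for illustration.

```python
import math

def so2_cpg(steps, alpha=1.01, phi=0.2, o1=0.1, o2=0.0):
    """Simulate a two-neuron oscillator (illustrative, not the published controller).

    The weight matrix is a rotation by `phi` scaled by `alpha`:
        [[ alpha*cos(phi), alpha*sin(phi)],
         [-alpha*sin(phi), alpha*cos(phi)]]
    With alpha slightly above 1, the tanh neurons settle into a
    bounded, roughly sinusoidal oscillation.
    """
    w11 = w22 = alpha * math.cos(phi)
    w12 = alpha * math.sin(phi)
    w21 = -alpha * math.sin(phi)
    outputs = []
    for _ in range(steps):
        # Both neurons are updated synchronously from the previous state.
        o1, o2 = (math.tanh(w11 * o1 + w12 * o2),
                  math.tanh(w21 * o1 + w22 * o2))
        outputs.append((o1, o2))
    return outputs

if __name__ == "__main__":
    for o1, o2 in so2_cpg(10):
        print(f"{o1:+.3f} {o2:+.3f}")
```

In this sketch, `phi` sets the approximate phase advance per step and therefore the oscillation frequency. The two outputs are about a quarter period apart, which is what makes such a pair convenient as a source of phase-shifted leg signals.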

2019:

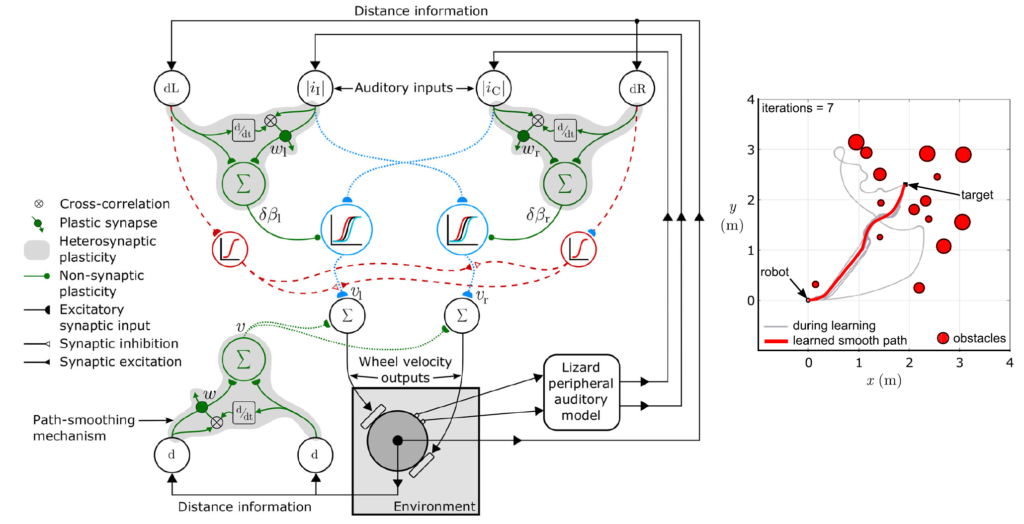

Neuroplasticity-inspired neural circuit for acoustic navigation with obstacle avoidance

We developed an adaptive neural circuit for reactive navigation with obstacle avoidance embodied in the environment. The neural circuit can generate smooth motion paths for a simulated mobile robot. This allows the robot to learn to smoothly navigate towards a virtual sound source while avoiding randomly placed obstacles in the environment. For more details, see Shaikh and Manoonpong, Neural Computing and Applications, 2019.

2017:

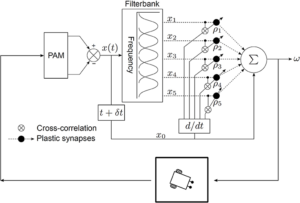

Adaptive neural acoustic-based navigation

The adaptive navigation system uses only two microphones and a neural mechanism coupled with a model of the peripheral auditory system of lizards (i.e., a lizard ear model). The peripheral auditory model provides sound direction information, which the neural mechanism uses to learn the target’s velocity via fast correlation-based unsupervised learning. For more details, see Shaikh and Manoonpong, Front. Neurorobot., 2017.
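As a rough illustration of what a correlation-based unsupervised rule looks like, the sketch below implements an input-correlation style update, in which a weight grows when an earlier (predictive) signal is active while a later (reflex) signal is changing. The summary above does not specify the exact rule or which signals play these roles, so the rule, the function names, the signal shapes, and the learning rate are assumptions.

```python
def ico_step(weight, predictive, reflex_now, reflex_prev, rate=0.1):
    """One update: dw = rate * predictive * (change in reflex signal)."""
    return weight + rate * predictive * (reflex_now - reflex_prev)

def run_trial(predictive, reflex, weight=0.0, rate=0.1):
    """Apply the update over two equally long signal traces; return the final weight."""
    for t in range(1, len(reflex)):
        weight = ico_step(weight, predictive[t], reflex[t], reflex[t - 1], rate)
    return weight

if __name__ == "__main__":
    # Hypothetical traces: the predictive signal starts before the reflex signal.
    predictive = [0, 0, 1, 1, 1, 0, 0, 0]
    reflex = [0, 0, 0, 0, 1, 1, 1, 0]
    print(run_trial(predictive, reflex))
```

No teacher or error signal appears in the update: the weight changes only through the temporal correlation between the two inputs, which is why such rules count as unsupervised.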

Neural path integration

The neural path integration mechanism supports homing behavior and associative goal learning in autonomous robots. The mechanism receives inputs from an allothetic compass and an odometer. The home vector is computed and represented in circular arrays of neurons, where heading angles are population-coded and linear displacements are rate-coded. Incoming signals are sustained through leaky neural integrator circuits, and the home vector is computed through local excitation and lateral inhibition interactions. This neural mechanism has been tested on a simulated hexapod robot, allowing it to navigate to multiple goals and autonomously return home. The mechanism reproduces various aspects of insect navigation. For more details, see Goldschmidt et al., Front. Neurorobot., 2017.
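The combination of a population-coded heading, a rate-coded displacement, and leaky integration can be sketched in a few lines. This is a simplified illustration, not the published circuit: the array size `N`, the cosine tuning, the unrectified activities, and the function names are assumptions, and the local excitation and lateral inhibition interactions are replaced here by a plain population-vector readout.

```python
import math

N = 18  # assumed number of neurons in the circular array
PREFERRED = [2 * math.pi * i / N for i in range(N)]  # preferred headings

def heading_layer(theta):
    """Population code of the compass heading using cosine tuning."""
    return [math.cos(theta - p) for p in PREFERRED]

def integrate(memory, theta, speed, leak=0.0):
    """One step of a leaky integrator driven by speed-scaled heading activity."""
    code = heading_layer(theta)
    return [(1.0 - leak) * m + speed * c for m, c in zip(memory, code)]

def decode(memory):
    """Population-vector readout: (distance, angle) of the outbound vector."""
    x = sum(m * math.cos(p) for m, p in zip(memory, PREFERRED))
    y = sum(m * math.sin(p) for m, p in zip(memory, PREFERRED))
    # With cosine tuning, the sums equal N/2 times the true components.
    return (2.0 / N) * math.hypot(x, y), math.atan2(y, x)

if __name__ == "__main__":
    memory = [0.0] * N
    # Hypothetical walk: two unit steps east, then two unit steps north.
    for theta in (0.0, 0.0, math.pi / 2, math.pi / 2):
        memory = integrate(memory, theta, speed=1.0)
    distance, angle = decode(memory)
    home_angle = angle + math.pi  # the home vector points the opposite way
    print(distance, angle, home_angle)
```

With `leak=0.0` the integrator is perfect. A positive leak makes older displacements fade, so the decoded distance underestimates long paths, which is one simple way to explore how integration errors accumulate.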