We have investigated the use of neural dynamics and machine learning for locomotion control. Based on this work, we have developed various neural control techniques.

Research highlights

2022:

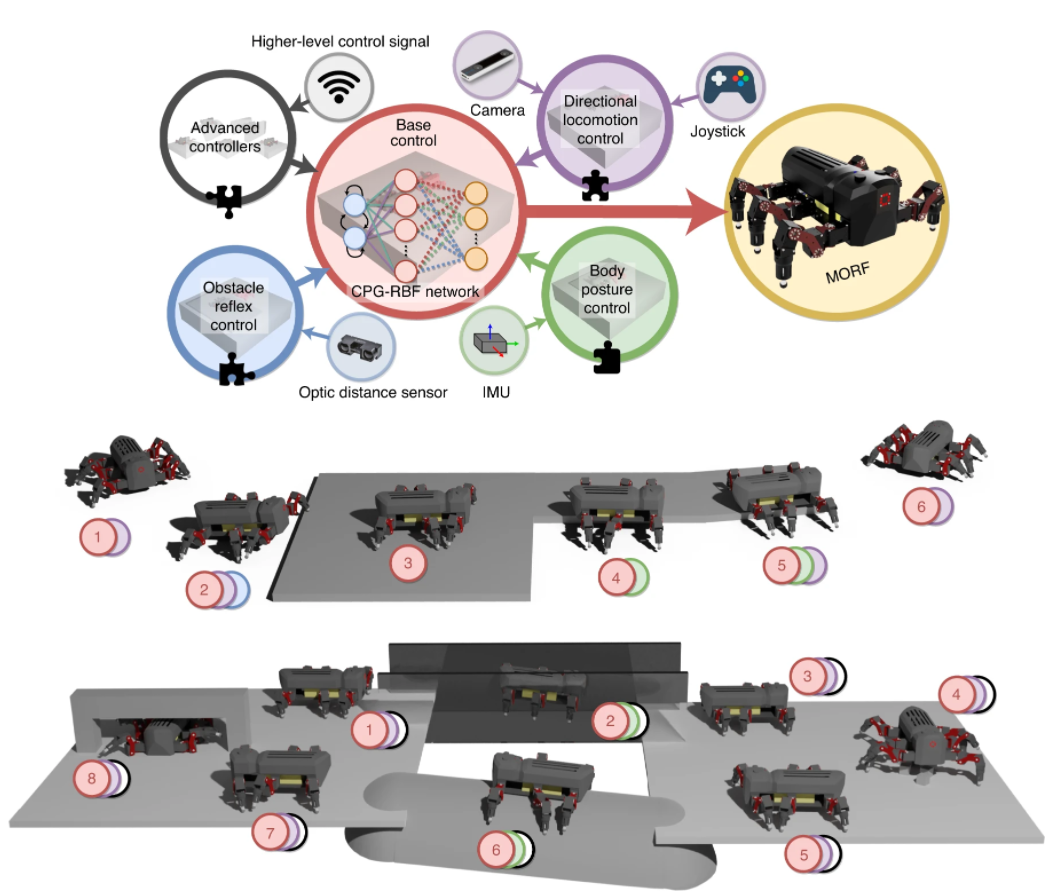

Versatile modular neural locomotion control with fast learning

Inspired by animal locomotion control, we propose a simple yet versatile modular neural control approach with fast learning for legged robots. The approach is scalable, analyzable, and robust against sensory faults, and it learns faster than state-of-the-art locomotion controllers. Using this approach, a hexapod robot can adaptively climb steps, climb between two walls, walk on a pipe, pass through a narrow space, and walk over uneven terrain. The approach will serve as a basis for autonomous lifelong learning for versatile robot skill acquisition (video). For more details, see Thor M., Manoonpong P., Nature Machine Intelligence, https://doi.org/10.1038/s42256-022-00444-0, 2022.

2021:

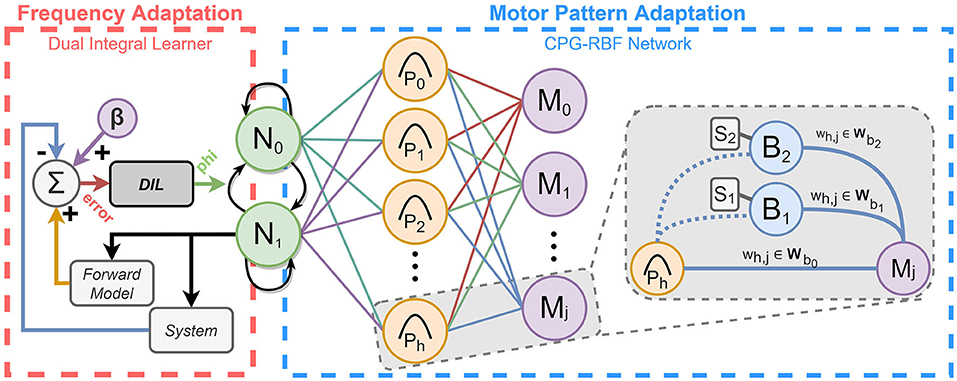

Locomotion control with frequency and motor pattern adaptations

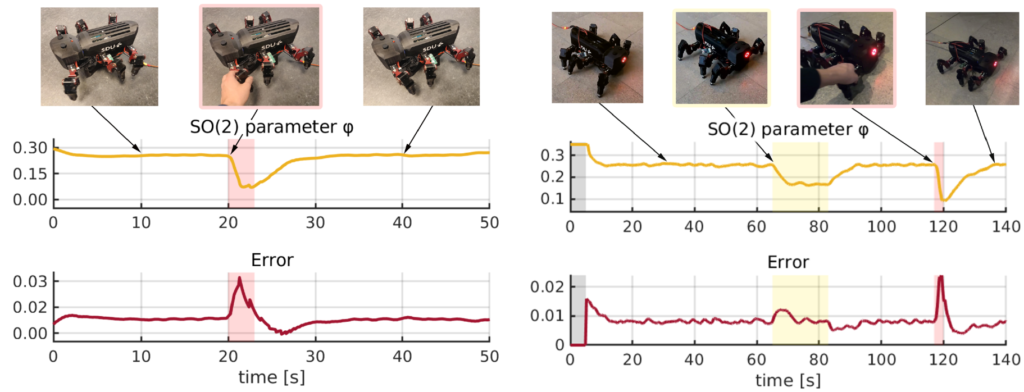

We present a central pattern generator (CPG)-based locomotion controller that integrates both frequency and motor pattern adaptation mechanisms. For frequency adaptation, we use the state-of-the-art Dual Integral Learner, which automatically and quickly adapts the CPG frequency so that the entire motor pattern (the output signal of the CPG) can be followed at a suitably high frequency with low tracking error. Consequently, the legged robot can move with high energy efficiency and execute the generated locomotion with high precision. The versatile, state-of-the-art CPG-RBF network serves as the motor pattern adaptation mechanism. With this network, the motor patterns (joint trajectories) can be adapted to fit the robot's morphology, and sensorimotor integration enables online motor pattern adaptation based on sensory feedback. Overall, the frequency and motor pattern adaptation mechanisms complement each other well, and their combination can be seen as an essential step toward further studies on adaptive locomotion control (Video 1). For more details, see Thor M., Strohmer B., Manoonpong P., Front. Neural Circuits, 2021.
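This summary does not give the Dual Integral Learner's equations. As a rough illustration of the general idea of combining a fast and a slow integral learner, the sketch below uses a generic two-rate learner: a fast state that adapts quickly but leaks, and a slow state that integrates steadily, both driven by the same error. It is closed around its own tracking error toward a setpoint. The class name, parameters, and values are hypothetical, and in the described controller the adapted quantity would be the CPG frequency, which this sketch does not model.

```python
from dataclasses import dataclass


@dataclass
class DualRateIntegralLearner:
    """Generic fast + slow integral learner (illustrative, not the paper's DIL).

    Both states integrate the same error; the fast state reacts quickly but
    leaks, the slow state reacts slowly but keeps what it has learned.
    The learner's output is the sum of the two states.
    """

    a_fast: float = 0.6   # retention of the fast state (< 1: forgets)
    b_fast: float = 0.4   # learning rate of the fast state
    a_slow: float = 1.0   # retention of the slow state (1: pure integrator)
    b_slow: float = 0.05  # learning rate of the slow state
    x_fast: float = 0.0
    x_slow: float = 0.0

    def update(self, error: float) -> float:
        """Integrate one error sample and return the new learner output."""
        self.x_fast = self.a_fast * self.x_fast + self.b_fast * error
        self.x_slow = self.a_slow * self.x_slow + self.b_slow * error
        return self.x_fast + self.x_slow


def run_closed_loop(setpoint: float, steps: int) -> tuple[float, DualRateIntegralLearner]:
    """Feed the learner its own tracking error so that its output approaches setpoint."""
    learner = DualRateIntegralLearner()
    output = 0.0
    for _ in range(steps):
        output = learner.update(setpoint - output)
    return output, learner


if __name__ == "__main__":
    out, learner = run_closed_loop(setpoint=1.0, steps=500)
    print(f"output={out:.4f} fast={learner.x_fast:.4f} slow={learner.x_slow:.4f}")
```

With these values the fast state supplies most of the early response (after one step it holds 0.4 versus 0.05 for the slow state), while the slow state, acting as a pure integrator, ends up holding the learned value and removes the remaining error.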

2020:

General distributed neural control and sensory adaptation

We introduce a fast learning mechanism based on the biological serotonergic system for online sensory adaptation. It continuously adjusts the strength of sensory pathways, thereby introducing flexible plasticity into the connections between sensory feedback and neural control circuits. We combine this sensory adaptation mechanism with distributed neural control circuits to achieve adaptive and robust interlimb coordination in walking robots. The approach is also general and flexible: it can automatically adapt to different walking robots, allowing them to perform stable self-organized locomotion and to quickly deal with damage within a few walking steps. We validated our adaptive interlimb coordination approach with continuous online sensory adaptation on simulated 4-, 6-, 8-, and 20-legged robots. For more details, see Miguel-Blanco A. and Manoonpong P., Front. Neural Circuits 14:46, doi: 10.3389/fncir.2020.00046, 2020.

Generic neural locomotion control framework

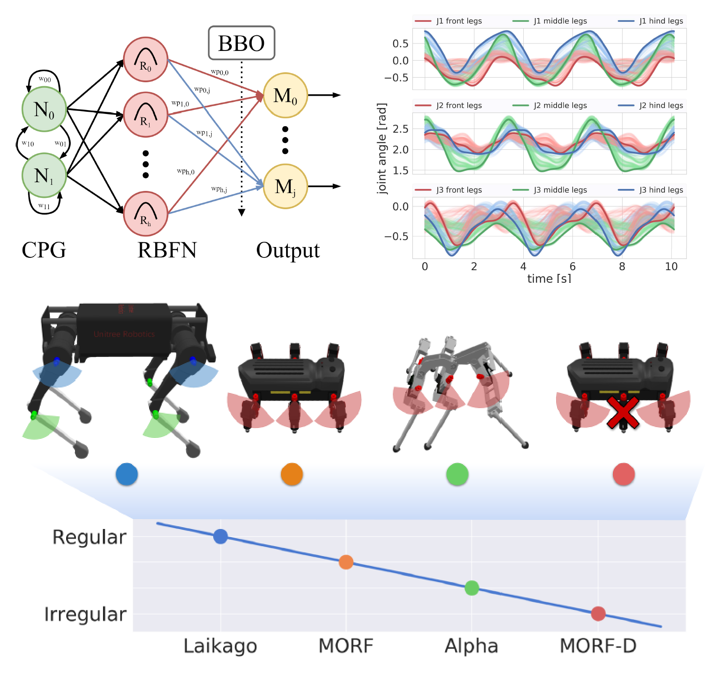

We present a generic locomotion control framework for legged robots and a strategy for control policy optimization. The framework is based on neural control and black-box optimization. The neural control combines a central pattern generator (CPG) and a radial basis function (RBF) network to create a CPG-RBF network. The control network acts as a neural basis for producing arbitrary rhythmic trajectories for the robot's joints. For more details, see Thor et al., IEEE Transactions on Neural Networks and Learning Systems, 2020.

Videos: Video 1.1, Video 1.2 (Laikago); Video 2.1, Video 2.2 (Alpha); Video 3.1, Video 3.2 (MORF); Video 4 (real robot)
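The CPG-RBF network described above can be sketched in a few lines, assuming a two-neuron SO(2)-type oscillator as the CPG and Gaussian RBF kernels placed at evenly spaced points on its limit cycle. Each kernel then responds to one phase of the rhythm, and the motor output is a weighted sum of kernel activations. The parameter values (α = 1.01, φ = 0.05π, 20 kernels, a width of about one center spacing) are illustrative choices. Here the weights are fitted with a simple LMS delta rule toward a made-up target trajectory, whereas the framework above optimizes its control policy with black-box optimization.

```python
import math


def so2_cpg(phi: float, alpha: float = 1.01, steps: int = 2000) -> list[tuple[float, float]]:
    """Iterate a two-neuron SO(2)-type oscillator, o(t+1) = tanh(W o(t))."""
    c, s = alpha * math.cos(phi), alpha * math.sin(phi)
    o0, o1 = 0.2, 0.0  # non-zero start; the origin is unstable for alpha > 1
    trace = []
    for _ in range(steps):
        o0, o1 = math.tanh(c * o0 + s * o1), math.tanh(-s * o0 + c * o1)
        trace.append((o0, o1))
    return trace


def one_cycle(trace, skip: int = 1000):
    """Cut out one period (between two upward zero crossings of o0) after a transient."""
    x = [state[0] for state in trace]
    ups = [t + 1 for t in range(skip, len(x) - 1) if x[t] < 0.0 <= x[t + 1]]
    return trace[ups[0]:ups[1]]


def rbf_layer(state, centers, sigma2: float) -> list[float]:
    """Gaussian RBF activations of one CPG state with respect to every kernel center."""
    return [math.exp(-((state[0] - c0) ** 2 + (state[1] - c1) ** 2) / sigma2)
            for c0, c1 in centers]


def predict(weights, r) -> float:
    """Motor output: weighted sum of RBF activations."""
    return sum(w * ri for w, ri in zip(weights, r))


def fit_cpg_rbf(target, cycle, n_kernels: int = 20, lr: float = 0.5, epochs: int = 200):
    """Place kernels on the CPG cycle and fit output weights with the LMS delta rule."""
    centers = [cycle[k * len(cycle) // n_kernels] for k in range(n_kernels)]
    sigma2 = math.dist(centers[0], centers[1]) ** 2  # width of about one center spacing
    acts = [rbf_layer(state, centers, sigma2) for state in cycle]
    weights = [0.0] * n_kernels
    for _ in range(epochs):
        for r, y_star in zip(acts, target):
            err = y_star - predict(weights, r)
            weights = [w + lr * err * ri for w, ri in zip(weights, r)]
    return weights, acts


def mse(weights, acts, target) -> float:
    """Mean squared error of the network output over one cycle."""
    return sum((predict(weights, r) - y) ** 2 for r, y in zip(acts, target)) / len(target)


if __name__ == "__main__":
    cycle = one_cycle(so2_cpg(phi=0.05 * math.pi))
    n = len(cycle)
    # A made-up rhythmic joint trajectory, indexed by phase within the cycle.
    target = [math.sin(2 * math.pi * t / n) + 0.5 * math.sin(4 * math.pi * t / n) for t in range(n)]
    weights, acts = fit_cpg_rbf(target, cycle)
    before, after = mse([0.0] * len(weights), acts, target), mse(weights, acts, target)
    print(f"cycle length {n}: MSE {before:.3f} -> {after:.5f}")
```

Changing the target list reshapes the joint trajectory without touching the oscillator, so the CPG keeps the timing while the RBF layer carries the shape.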

2019:

Fast online frequency adaptation for CPG-based robot motion control

We present adaptive neural central pattern generator (CPG)-based locomotion control for a hexapod robot. The control mechanism modulates the CPG frequency through synaptic plasticity of the neural CPG network. The modulation is driven by tracking-error feedback between the CPG output and the joint angle sensory feedback of the hexapod robot. As a result, the mechanism continually tries to match the CPG frequency to the walking performance of the robot, ensuring that the entire generated trajectory can be followed with low tracking error. Real robot experiments show that our mechanism can automatically generate a suitable walking frequency for energy-efficient locomotion given the robot's body, and can quickly adapt the frequency online, within a few seconds, to deal with external perturbations such as leg blocking and variations in electrical power. For more details, see Thor and Manoonpong, IEEE Robotics and Automation Letters, Volume 4, Issue 4, pp. 3324–3331, DOI: 10.1109/LRA.2019.2926660, 2019.
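If the neural CPG is taken to be a two-neuron SO(2)-type oscillator (an assumption here, since the summary does not name the network), the link between synaptic weights and walking frequency is direct. The weight matrix is a scaled rotation by an angle φ, and for α slightly above 1 the oscillator advances by roughly φ per update, so changing φ through plasticity changes the frequency. The tracking error e(t) compares the CPG-derived joint command y(t) with the measured joint angle θ(t); the specific rule that maps e(t) to changes in φ is not given here.

$$
\mathbf{o}(t+1) = \tanh\big(\mathbf{W}(\varphi)\,\mathbf{o}(t)\big), \qquad
\mathbf{W}(\varphi) = \alpha \begin{pmatrix} \cos\varphi & \sin\varphi \\ -\sin\varphi & \cos\varphi \end{pmatrix},
$$

$$
f \approx \frac{\varphi}{2\pi\,\Delta t}, \qquad e(t) = y(t) - \theta(t),
$$

where Δt is the control update interval. For instance, with a hypothetical Δt = 0.1 s and φ = 0.05π, f ≈ 0.05π / (2π · 0.1 s) = 0.25 Hz.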

2016:

Adaptive combinatorial neural control


We develop an adaptive combinatorial neural control circuit for robust locomotion of a biped robot. The circuit consists of reflex-based and central pattern generator (CPG)-based mechanisms. The reflex-based mechanism generates energy-efficient bipedal locomotion, while the CPG-based mechanism with synaptic plasticity ensures robustness against the loss of global sensory feedback (e.g., foot contact sensors) and allows adaptation to environmental changes within a few steps. We have successfully applied our control approach to the biomechanical bipedal robot DACBOT. For more details, see Di Canio et al., SAB, 2016.

2014:

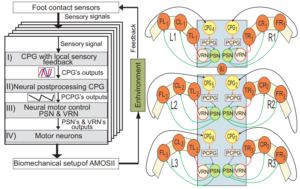

Multiple CPGs-based control with local feedback*

We develop a locomotion control strategy based on distributed central pattern generators (CPGs), local sensory feedback, and their interactions during body and leg movements through the environment. Based on this, we can generate self-organizing emergent locomotion allowing a hexapod robot to adaptively form regular patterns, to stably walk while pushing an object with its front legs or performing multiple stepping of the front legs, to deal with morphological change, and to synchronize its movement with another robot during a collaborative task. For more details, see Barikhan et al., SAB, 2014.

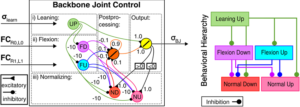

Adaptive climbing control*

Adaptive climbing control combines locomotion control, backbone joint control, local leg reflexes, and neural learning. While the first three components generate locomotion, including walking and climbing, the neural learning mechanism allows the robot to adapt its obstacle negotiation behavior to changing conditions, e.g., variable obstacle heights and different walking gaits. The adaptive climbing control was first developed and tested in a physics-based robot simulation and then successfully transferred to a real hexapod robot called AMOS II. The results show that the robot can efficiently negotiate obstacles with a height of up to 85% of its leg length in simulation and up to 75% in a real environment. For more details, see Goldschmidt et al., Front. Neurorobot., 2014.
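The summary does not name the neural learning rule. As one illustration of how learning can adapt obstacle negotiation, the sketch below uses input correlation (ICO) learning, a correlation-based rule in which the weight of an early, predictive input grows whenever that input is active while a later reflex input changes; whether the cited controller uses this exact rule is an assumption. The timings, trace shape, learning rate, and the closed-loop condition (a strong enough anticipatory response prevents the reflex, so learning stops) are hypothetical.

```python
import math


def run_trial(w1: float, mu: float = 0.5, threshold: float = 0.5, steps: int = 60) -> float:
    """Simulate one obstacle encounter and return the updated predictive weight w1.

    x1: predictive input (e.g., a range sensor), a decaying trace starting at t = 10.
    x0: reflex input (e.g., a collision signal), a pulse during t = 20..25.
    """
    t_predict, t_reflex, reflex_len, tau = 10, 20, 6, 10.0
    # Hypothetical closed loop: max(x1) is 1, so the anticipatory drive peaks at w1.
    # Once that drive reaches the threshold, the collision is avoided and the
    # reflex never fires during this trial.
    reflex_fires = w1 < threshold
    prev_x0 = 0.0
    for t in range(steps):
        x1 = math.exp(-(t - t_predict) / tau) if t >= t_predict else 0.0
        x0 = 1.0 if reflex_fires and t_reflex <= t < t_reflex + reflex_len else 0.0
        # ICO rule: dw1 = mu * x1(t) * (x0(t) - x0(t - 1)).
        w1 += mu * x1 * (x0 - prev_x0)
        prev_x0 = x0
    return w1


def learn(trials: int, w1: float = 0.0) -> float:
    """Repeat obstacle encounters and return the final predictive weight."""
    for _ in range(trials):
        w1 = run_trial(w1)
    return w1


if __name__ == "__main__":
    for n in (1, 3, 6, 7, 20):
        print(f"after {n:2d} trials: w1 = {learn(n):.3f}")
```

With these values, each trial raises w1 by 0.5 · (e^-1 − e^-1.6) ≈ 0.083, because the predictive trace is larger at reflex onset than at reflex offset. The weight crosses the threshold on the seventh trial and then stays constant, since without a reflex signal the derivative term is zero.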

2013:

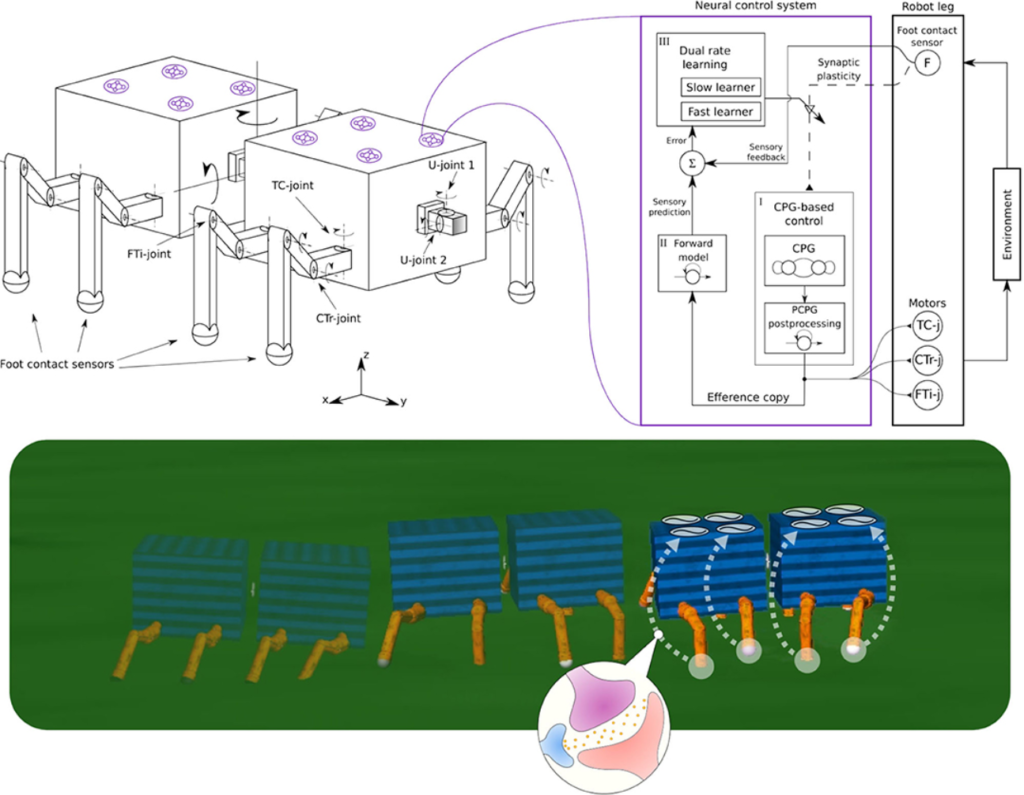

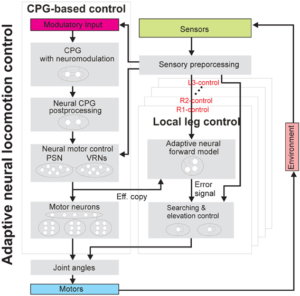

Adaptive modular neural locomotion control*

We develop a series of modular neural locomotion controllers, the latest of which consists of a single CPG mechanism with neuromodulation and local leg control mechanisms based on sensory feedback and adaptive neural forward models with efference copies. This neural closed-loop controller enables a walking machine to perform a multitude of different walking patterns, including insect-like leg movements and gaits, as well as energy-efficient locomotion. In addition, the forward models allow the machine to autonomously adapt its locomotion to deal with a change of terrain, loss of ground contact during the stance phase, stepping on or hitting an obstacle during the swing phase, and leg damage, and even to enable cockroach-like climbing behavior. Such an embodied neural closed-loop system can be a powerful way to develop robust and adaptable machines. For more details, see Manoonpong et al., Front. Neural Circuits, 2013.
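The forward-model idea can be illustrated with a deliberately simple stand-in: a model that receives an efference copy of the motor command, learns during undisturbed walking to predict the resulting sensor signal, and then exposes mismatches, e.g., when the foot loses ground contact. The linear form, the LMS learning rule, the sinusoidal command, and the sensor model (0.6·u + 0.3) are all hypothetical; the cited controller uses adaptive neural forward models whose exact form is not given here.

```python
import math


class LinearForwardModel:
    """Hypothetical linear forward model: efference copy -> expected sensor value."""

    def __init__(self, lr: float = 0.1) -> None:
        self.w = 0.0  # gain from motor command to expected sensor signal
        self.b = 0.0  # offset of the expected sensor signal
        self.lr = lr

    def predict(self, efference_copy: float) -> float:
        return self.w * efference_copy + self.b

    def learn(self, efference_copy: float, sensed: float) -> float:
        """One LMS (delta-rule) step; returns the prediction error before the update."""
        err = sensed - self.predict(efference_copy)
        self.w += self.lr * err * efference_copy
        self.b += self.lr * err
        return err


def motor_command(t: int, period: int = 40) -> float:
    """Rhythmic motor command sent to one leg joint (stand-in for a CPG output)."""
    return math.sin(2 * math.pi * t / period)


def healthy_sensor(u: float) -> float:
    """Hypothetical sensor response during undisturbed walking."""
    return 0.6 * u + 0.3


def mean_abs_error(model: LinearForwardModel, sensor, period: int = 40) -> float:
    """Average |sensed - predicted| over one gait cycle."""
    cmds = [motor_command(t, period) for t in range(period)]
    return sum(abs(sensor(u) - model.predict(u)) for u in cmds) / period


def train(steps: int = 2000) -> LinearForwardModel:
    """Learn the forward model during undisturbed walking only."""
    model = LinearForwardModel()
    for t in range(steps):
        u = motor_command(t)
        model.learn(u, healthy_sensor(u))
    return model


if __name__ == "__main__":
    model = train()
    print("healthy:", round(mean_abs_error(model, healthy_sensor), 4))
    # Loss of ground contact modelled as a sensor that stays at 0.
    print("lost contact:", round(mean_abs_error(model, lambda u: 0.0), 4))
```

A large prediction error is the kind of cue a local leg controller could act on, for example by extending the leg further to search for ground; how that cue is turned into behavior is outside this sketch.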

2010:

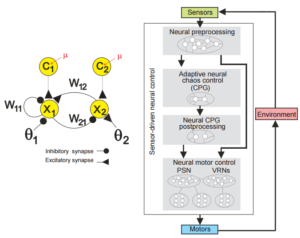

Neural chaos control*

We develop a chaotic pattern generator based on a two-neuron system and apply it to control our hexapod robot AMOS. Using this single chaotic pattern generator, we can achieve 11 basic behavioral patterns (for example, orienting, taxis, self-protection, and various gaits) and their combinations for AMOS. These complex behaviors allow AMOS to successfully navigate through a complex environment. Moreover, with this controller the robot can not only react to environmental stimuli but also learn to find behaviorally useful motor responses for different situations. We also extended this control to six chaotic pattern generators, each controlling one leg of the robot. In this way, the robot can maintain its body balance and compensate for leg damage. Thus, such a neural control mechanism provides a powerful yet simple way to self-organize versatile behaviors in autonomous agents with many degrees of freedom. For more details, see Steingrube et al., Nature Physics, 2010.

Past:

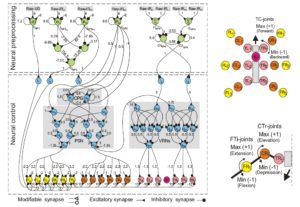

Modular neural control*

The modular neural controller of our walking machines is modeled as an artificial neural network with discrete-time dynamics. Parts of it were developed by exploiting the dynamical properties of recurrent neural networks. The controller has a modular structure composed of two main modules: the modular neural control and the neural sensory preprocessing networks. The modular neural control, based on a CPG, generates omnidirectional walking and drives the reflex behaviors, while the neural preprocessing networks filter sensory noise and shape the sensory data to activate an appropriate reactive behavior, e.g., a self-protective reflex, escape behavior, or obstacle avoidance behavior. The neuromodules are small, so their structure-function relationships can be analyzed. The complete controller is general in the sense that it can be easily adapted to different types of even-legged walking machines without changing its internal structure and parameters. For more details, see Manoonpong et al., RAS, 2008; Manoonpong et al., IJRR, 2007.
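One way a small preprocessing network can suppress sensory noise is the hysteresis of a single discrete-time neuron with a strong self-connection. The sketch below shows that effect; the weights, bias, and initial state are illustrative assumptions rather than values from the cited controller.

```python
import math


def preprocessing_neuron(inputs, w_in: float = 1.0, w_self: float = 2.0,
                         bias: float = 0.0, o_init: float = -0.9) -> list[float]:
    """Discrete-time neuron with a self-connection.

    o(t+1) = tanh(w_in * x(t) + w_self * o(t) + bias)
    With w_self > 1 the neuron is bistable: weak input fluctuations leave its
    state unchanged, while a sufficiently strong input switches it (hysteresis).
    """
    o = o_init
    outputs = []
    for x in inputs:
        o = math.tanh(w_in * x + w_self * o + bias)
        outputs.append(o)
    return outputs


if __name__ == "__main__":
    noise = [0.4, -0.3, 0.5, 0.2, -0.5]  # weak, noisy sensor readings
    stimulus = [1.0] * 10                # a strong, sustained stimulus
    out = preprocessing_neuron(noise + stimulus + noise)
    print([round(v, 2) for v in out])
```

With these values, a sustained input above roughly 0.53 is needed to switch the neuron from its low to its high state (and below roughly −0.53 to switch it back), so readings within ±0.5 are filtered out while the neuron keeps its last decision.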