Research highlights

2019:

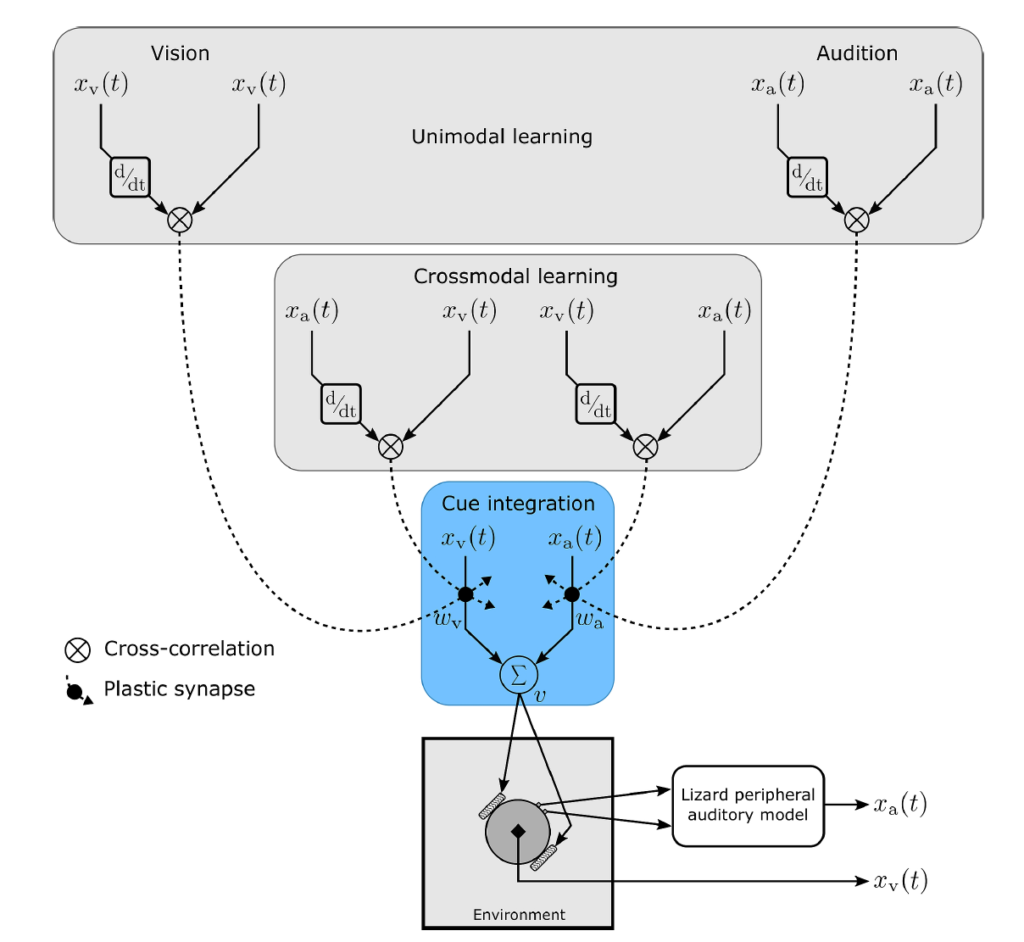

Concurrent intramodal learning enhances multisensory responses of symmetric crossmodal learning in robotic audio-visual tracking

We present a Hebbian learning-based adaptive neural circuit for multimodal cue integration. The circuit temporally correlates stimulus cues within each modality via intramodal learning, and symmetrically across modalities via crossmodal learning, to update modality-specific neural weights independently on a sample-by-sample basis. It is realized as a robotic agent that must orient towards a moving audio-visual target. It continuously learns the weights required for a weighted combination of auditory and visual spatial directional cues, which is mapped directly to robot wheel velocities to elicit an orientation response. For more details see Shaikh et al., Cognitive Systems Research, 2019.
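The weight update described above can be illustrated with a minimal Python sketch. This is not the published circuit: the class `CueIntegrator`, the learning rates, the initial weights, the exact form of the correlation terms, and the sign convention in `wheel_velocities` are all assumptions chosen for illustration. It only shows the general idea of a crossmodal term shared symmetrically by both weights, an intramodal term per modality, and a weighted sum mapped to wheel velocities.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class CueIntegrator:
    """Illustrative Hebbian-style cue integrator (hypothetical, not the published model)."""

    w_audio: float = 0.1      # assumed initial auditory weight
    w_visual: float = 0.1     # assumed initial visual weight
    eta_cross: float = 0.01   # assumed crossmodal learning rate
    eta_intra: float = 0.005  # assumed intramodal learning rate
    prev_audio: float = 0.0   # previous auditory sample
    prev_visual: float = 0.0  # previous visual sample

    def step(self, audio: float, visual: float) -> float:
        """Update both weights from one sample and return the combined cue."""
        # Crossmodal term: correlation between the two modalities' current cues,
        # applied symmetrically to both weights.
        cross = audio * visual
        # Intramodal terms: correlation of each cue with its own previous sample.
        self.w_audio += self.eta_cross * cross + self.eta_intra * audio * self.prev_audio
        self.w_visual += self.eta_cross * cross + self.eta_intra * visual * self.prev_visual
        self.prev_audio, self.prev_visual = audio, visual
        # Weighted combination of the two directional cues.
        return self.w_audio * audio + self.w_visual * visual


def wheel_velocities(turn: float, gain: float = 1.0) -> Tuple[float, float]:
    """Map a turn signal to (left, right) wheel velocities for turning on the spot.

    Assumed convention: a positive turn signal drives the right wheel forward.
    """
    return (-gain * turn, gain * turn)


integrator = CueIntegrator()
for _ in range(10):
    # Both cues report the same (hypothetical) target direction.
    turn = integrator.step(audio=0.5, visual=0.5)
left, right = wheel_velocities(turn)
```

A plain Hebbian update of this kind grows without bound while the cues stay correlated, so a practical version would need some form of normalisation or saturation; how the published circuit handles this is described in the cited paper.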

2018:

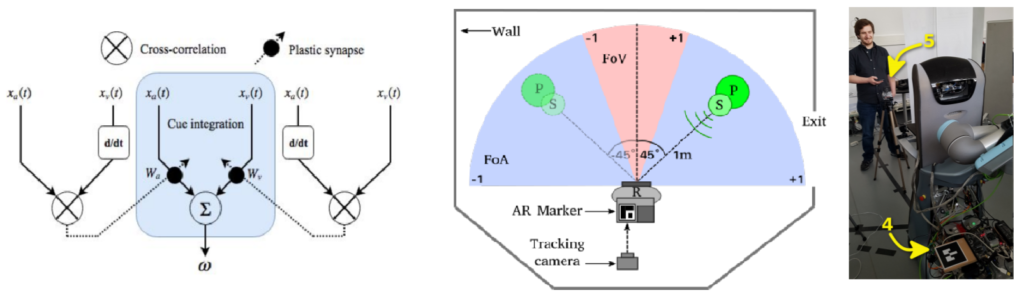

Crossmodal Learning for Smooth Multimodal Attention Orientation

We present an adaptive neural circuit for multisensory attention orientation that combines auditory and visual directional cues. The circuit learns to integrate sound direction cues, extracted via a model of the peripheral auditory system of lizards, with visual directional cues obtained via deep learning-based object detection. We implement the neural circuit on a robot and demonstrate that integrating multisensory information via the circuit generates appropriate motor velocity commands that control the robot’s orientation movements. For more details see Haarslev et al., Proceedings of the 10th International Conference on Social Robotics, Lecture Notes in Computer Science, 2018.
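As a rough illustration of how an object detector's output might be turned into a visual directional cue, the Python sketch below converts a bounding box into a normalised horizontal offset. The function name `visual_direction_cue`, the box format, and the sign convention are assumptions made for this example and are not taken from the cited paper.

```python
from typing import Optional, Tuple


def visual_direction_cue(
    box: Optional[Tuple[float, float]], image_width: float
) -> float:
    """Return a horizontal direction cue in [-1, 1] from a detection box.

    `box` is a hypothetical (x_min, x_max) pair in pixels, or None when the
    detector found no target. Assumed convention: positive values mean the
    target lies to the right of the image centre.
    """
    if box is None:
        return 0.0  # no detection: no visual directional cue
    x_min, x_max = box
    centre = 0.5 * (x_min + x_max)
    half_width = image_width / 2.0
    return (centre - half_width) / half_width


cue_centred = visual_direction_cue((0.0, 640.0), 640.0)
cue_right = visual_direction_cue((480.0, 640.0), 640.0)
```

A cue of this form shares a range with a normalised sound direction signal, which makes the two straightforward to combine in a weighted sum.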

2017:

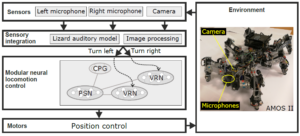

Multisensory locomotion control

We develop a multisensory locomotion control architecture that uses visual feedback to adaptively improve the acoustomotor orientation response of the hexapod robot AMOS II. The robot is tasked with localising an audio-visual target by turning towards it. The circuit uses a model of the peripheral auditory system of lizards to extract sound direction information, which modulates the parameters of the locomotor central pattern generators driving the turning behaviour. The visual information adaptively changes the strength of this acoustomotor coupling to adjust the turning speed of the robot. For more details see Shaikh et al., CLAWAR, 2017.
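The coupling between sound direction and turning can be sketched in Python as follows. This is a simplified illustration under stated assumptions, not the controller used on AMOS II: the sinusoidal oscillator, the amplitude-based turning scheme, the functions `leg_amplitudes`, `cpg_output` and `adapt_coupling`, and the adaptation rule and its rate are all hypothetical.

```python
import math
from typing import Tuple


def leg_amplitudes(
    sound_direction: float, coupling: float, base: float = 1.0
) -> Tuple[float, float]:
    """Return (left, right) stride amplitudes for the two sides of the robot.

    Assumed convention: sound_direction is in [-1, 1] and positive when the
    target is to the right, so the left legs take larger strides to turn right.
    """
    # The coupling strength scales how strongly sound direction drives turning.
    turn = max(-1.0, min(1.0, coupling * sound_direction))
    return base * (1.0 + turn), base * (1.0 - turn)


def cpg_output(phase: float, amplitude: float) -> float:
    """Output of a simple sinusoidal pattern generator at a given phase."""
    return amplitude * math.sin(phase)


def adapt_coupling(coupling: float, visual_error: float, rate: float = 0.05) -> float:
    """Hypothetical adaptation rule: strengthen the coupling while the target
    is still off-centre in the camera image (visual_error != 0)."""
    return coupling + rate * abs(visual_error)


coupling = 1.0
left_amp, right_amp = leg_amplitudes(sound_direction=0.5, coupling=coupling)
coupling = adapt_coupling(coupling, visual_error=-0.2)
```

In this sketch a larger coupling produces a larger left-right amplitude difference for the same sound direction, which corresponds to a faster turn.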