Research highlights

2019:

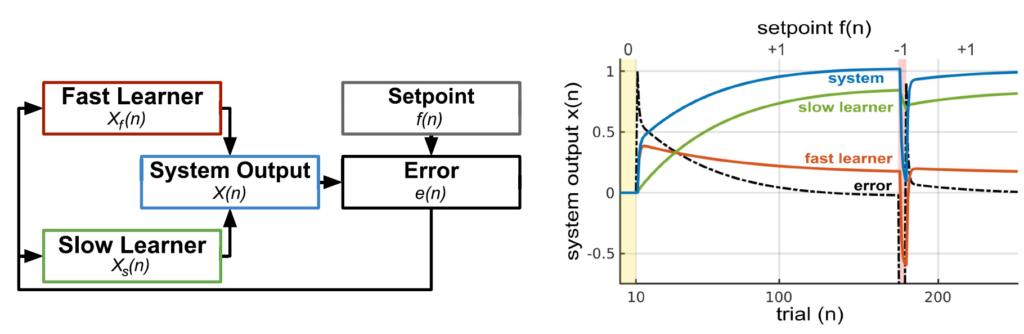

Error-based learning mechanism for fast online adaptation

We developed an online error-based learning mechanism for the fast adaptation of robot control. The mechanism is a novel modification of the dual learner (DL), called the dual integral learner (DIL). It reduces both tracking and steady-state errors while learning quickly and stably, adapting the control parameter(s) to the performance of the robotic system. Because the meta-parameters of the DIL correspond directly to error reduction, they are more straightforward to set for complex systems (such as walking robots) than those of traditional correlation-based learning. Thanks to its embedded memory, the DIL can also relearn quickly and recover spontaneously from previously learned parameters. For more details, see Thor et al., IEEE Transactions on Neural Networks and Learning Systems, 2019.
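As a rough illustration of why an integral term removes steady-state error, the sketch below assumes that the DL is a dual-rate learner (a fast and a slow process, each with a retention factor and a learning rate) and that the DIL adds an integrator driven by the same error. This structure is an assumption, not the published formulation; the class name, the parameter values, and the toy plant (whose output simply equals the learned parameter) are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class DualIntegralLearner:
    """Hypothetical sketch: fast + slow leaky learners plus an integrator.

    The learned control parameter is the sum of the three states. All
    default values are illustrative assumptions, not values from the paper.
    """
    a_f: float = 0.59   # fast process retention (forgets quickly)
    b_f: float = 0.21   # fast process learning rate
    a_s: float = 0.992  # slow process retention (forgets slowly)
    b_s: float = 0.02   # slow process learning rate
    b_i: float = 0.05   # integral gain (0.0 gives a plain dual-rate learner)
    x_f: float = 0.0
    x_s: float = 0.0
    x_i: float = 0.0

    def output(self) -> float:
        return self.x_f + self.x_s + self.x_i

    def update(self, error: float) -> float:
        # Fast process: large learning rate, strong decay
        self.x_f = self.a_f * self.x_f + self.b_f * error
        # Slow process: small learning rate, weak decay
        self.x_s = self.a_s * self.x_s + self.b_s * error
        # Integrator: no decay, so any persistent error keeps moving it
        self.x_i = self.x_i + self.b_i * error
        return self.output()


def run(learner: DualIntegralLearner, target: float, steps: int) -> float:
    """Toy plant whose output equals the learned parameter; returns final error."""
    for _ in range(steps):
        learner.update(target - learner.output())
    return target - learner.output()
```

With these assumed values, `run(DualIntegralLearner(), 1.0, 5000)` leaves a residual error close to zero, whereas `b_i=0.0` (a plain dual-rate learner) settles at an error of roughly 0.25, because its leaky fast and slow processes need a nonzero error to sustain their output.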

2014:

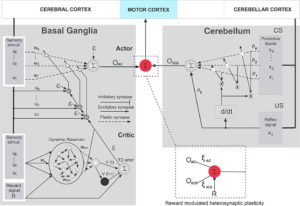

Combinatorial learning with reward modulated heterosynaptic plasticity*

We have extended the combinatorial learning mechanism by adding a bio-inspired reward-modulated heterosynaptic plasticity (RMHP) rule and by applying a recurrent-neural-network-based critic model to continuous actor-critic reinforcement learning. We demonstrate that the novel adaptive mechanism leads to stable, robust, and fast learning of goal-directed behaviors. For more details, see Dasgupta et al., Front. Neural Circuits, 2014.
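The exact RMHP rule is given in Dasgupta et al. (2014); the sketch below is only one plausible reading of a reward-modulated Hebbian update, assuming that the outputs of the ICO learner and the actor are mixed by two weights, and that each weight changes in proportion to the reward surprise (reward minus a running reward average) times the product of its own learner's output and the combined output. Because the combined output depends on both pathways, each weight's change also depends on the other pathway's activity. The class name, rates, and initial weights are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class RMHPCombiner:
    """Hypothetical reward-modulated combination of two learners' outputs."""
    w_ico: float = 0.5      # weight of the correlation-based (ICO) pathway
    w_ac: float = 0.5       # weight of the actor-critic pathway
    eta: float = 0.01       # plasticity rate (assumed)
    beta: float = 0.1       # smoothing factor of the running reward average
    reward_avg: float = 0.0

    def combine(self, o_ico: float, o_ac: float) -> float:
        return self.w_ico * o_ico + self.w_ac * o_ac

    def update(self, o_ico: float, o_ac: float, reward: float) -> float:
        o = self.combine(o_ico, o_ac)
        # Reward surprise: how much better or worse than usual this step was
        surprise = reward - self.reward_avg
        # Hebbian term (pathway output x combined output), gated by surprise
        self.w_ico += self.eta * surprise * o_ico * o
        self.w_ac += self.eta * surprise * o_ac * o
        # Low-pass filter the reward to track its running average
        self.reward_avg += self.beta * surprise
        return o
```

A positive surprise strengthens the pathway whose output agrees in sign with the combined action and weakens the one that opposes it; a negative surprise does the reverse.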

2013:

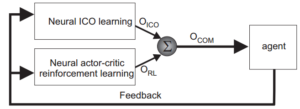

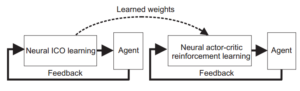

Combinatorial learning*

Combinatorial learning is a novel learning model based on classical and operant conditioning. Specifically, it combines correlation-based learning, using input correlation learning (ICO learning), with reward-based learning, using continuous actor-critic reinforcement learning (RL), so that it works as a dual learner system. The model can be used to effectively solve both dynamic motion control problems and goal-directed behavior control problems. For more details, see Manoonpong et al., Adv. Complex Syst., 2013.
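To make the two ingredients concrete, here is a minimal sketch, assuming the standard discrete-time form of ICO learning (each predictive weight changes with its input times the temporal derivative of the reflex input `u0`) and a standard TD(0) error for the critic. ICO setups usually low-pass filter their inputs; this sketch uses raw step signals instead. The trial timing, `run_trial`, and the fixed mixing weights in `combined_output` are hypothetical.

```python
class ICOLearner:
    """Discrete-time ICO learning with a fixed reflex weight rho0."""

    def __init__(self, n_predictive: int, mu: float = 0.1, rho0: float = 1.0):
        self.mu = mu
        self.rho0 = rho0
        self.rho = [0.0] * n_predictive  # learned predictive weights
        self.prev_u0 = 0.0

    def step(self, u0: float, u: list) -> float:
        # Output: fixed reflex pathway plus learned predictive pathways
        out = self.rho0 * u0 + sum(r * x for r, x in zip(self.rho, u))
        # Backward difference approximates du0/dt
        du0 = u0 - self.prev_u0
        self.prev_u0 = u0
        # ICO rule: d(rho_j)/dt = mu * u_j * du0/dt
        self.rho = [r + self.mu * x * du0 for r, x in zip(self.rho, u)]
        return out


def run_trial(learner, lead_start, lead_end, reflex_start=10, reflex_end=16, length=20):
    """One hypothetical trial: a predictive pulse u1 and a reflex pulse u0."""
    for t in range(length):
        u1 = 1.0 if lead_start <= t < lead_end else 0.0
        u0 = 1.0 if reflex_start <= t < reflex_end else 0.0
        learner.step(u0, [u1])


def td_error(reward: float, value: float, next_value: float, gamma: float = 0.98) -> float:
    """Standard TD(0) error that a critic passes to the actor."""
    return reward + gamma * next_value - value


def combined_output(o_ico: float, o_ac: float, xi_ico: float = 0.5, xi_ac: float = 0.5) -> float:
    """Hypothetical fixed-weight mix of the ICO and actor-critic outputs."""
    return xi_ico * o_ico + xi_ac * o_ac
```

When the predictive pulse starts before the reflex (`run_trial(learner, 5, 15)`), its weight grows by `mu` per trial; when it starts after the reflex onset (`run_trial(learner, 12, 20)`), the weight shrinks instead. This temporal asymmetry is what lets ICO learning turn a reflex into an anticipatory action.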

Self-adaptive reservoir temporal memory*

Self-adaptive reservoir temporal memory is generated by a self-adaptive reservoir network, which is based on reservoir computing with intrinsic plasticity (IP) and timescale adaptation. The memory model was successfully used for complex navigation with the hexapod robot AMOSII. It was also employed as an embedded temporal memory in the critic of an actor-critic reinforcement learning system, resulting in adaptive and robust robot behavior. For more details, see Dasgupta et al., Evolving Systems, 2013.
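The following is a minimal sketch of a reservoir with intrinsic plasticity, assuming a leaky-integrator echo state network with tanh neurons and the Gaussian-target IP rule of Schrauwen et al. (2008) for adapting each neuron's gain and bias. It does not reproduce the timescale adaptation of the original network: the per-neuron leak rates are drawn at random and then held fixed. All sizes, rates, and names are hypothetical.

```python
import numpy as np


class AdaptiveReservoir:
    """Leaky-integrator reservoir whose tanh neurons adapt gain and bias by IP,
    pushing each neuron's output distribution toward N(mu, sigma^2)."""

    def __init__(self, n_in, n_res, spectral_radius=0.9, eta_ip=1e-3,
                 mu=0.0, sigma=0.2, seed=0):
        rng = np.random.default_rng(seed)
        self.w_in = rng.uniform(-0.5, 0.5, (n_res, n_in))
        w = rng.uniform(-0.5, 0.5, (n_res, n_res))
        # Rescale recurrent weights to the requested spectral radius
        self.w = w * (spectral_radius / np.max(np.abs(np.linalg.eigvals(w))))
        # Per-neuron leak rates give a spread of timescales; they stay FIXED
        # here (the original model's timescale adaptation is not shown)
        self.leak = rng.uniform(0.1, 1.0, n_res)
        self.a = np.ones(n_res)   # IP-adapted gain
        self.b = np.zeros(n_res)  # IP-adapted bias
        self.eta_ip, self.mu, self.sigma = eta_ip, mu, sigma
        self.x = np.zeros(n_res)

    def step(self, u, adapt=True):
        z = self.w_in @ u + self.w @ self.x   # net input per neuron
        y = np.tanh(self.a * z + self.b)      # neuron output
        if adapt:
            s2 = self.sigma ** 2
            db = -self.eta_ip * (-self.mu / s2
                                 + (y / s2) * (2 * s2 + 1 - y ** 2 + self.mu * y))
            self.a += self.eta_ip / self.a + db * z
            self.b += db
        # Leaky integration: each neuron low-pass filters its own output
        self.x = (1 - self.leak) * self.x + self.leak * y
        return self.x.copy()


def fit_readout(states, target, ridge=1e-4):
    """Linear readout with a bias column, trained by ridge regression."""
    x = np.hstack([states, np.ones((len(states), 1))])
    w = np.linalg.solve(x.T @ x + ridge * np.eye(x.shape[1]), x.T @ target)
    mse = float(np.mean((x @ w - target) ** 2))
    return w, mse
```

For example, drive the reservoir with a random scalar input, discard an initial washout, and fit `fit_readout` to recall the input from one step earlier (`states[100:]` against `u[99:-1]`). Ridge regression can always fall back to zero weights, so the readout's training error ends up below the target's mean square.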